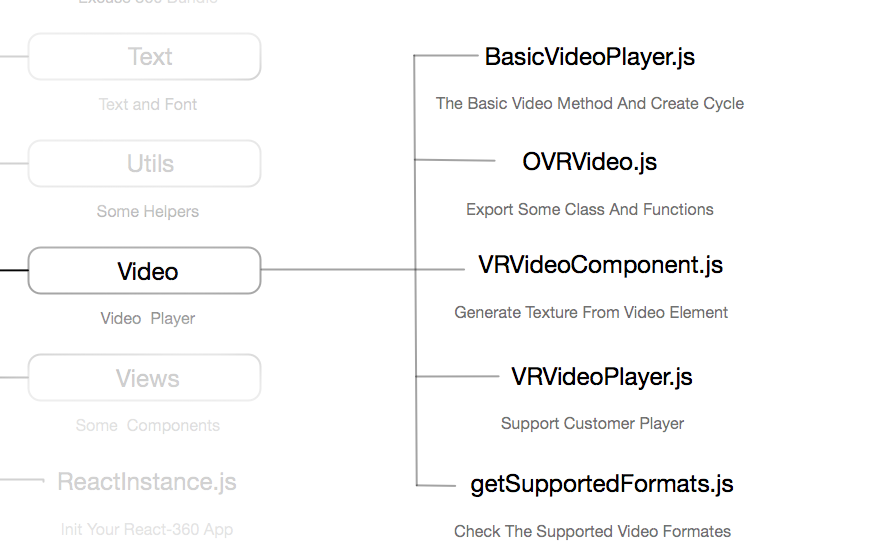

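In the previous post, React-360 Source Reading [5] - The Core Compositor, we looked at the Compositor, which touched on using video as a background. Today we analyze the contents of the video directory. If all we want is to set a panoramic video, we can simply call: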

```js
// Creating a Video Player
const player = r360.compositor.createVideoPlayer('myplayer');

// Set the video to be played, and its format
player.setSource('path/to/video.mp4', '2D');
```

Here, though, we focus on what each file under the video directory actually does.

getSupportedFormats.js

```js
const FORMATS = {
  ogg: 'video/ogg; codecs="theora, vorbis"',
  mp4: 'video/mp4; codecs="avc1.4D401E, mp4a.40.2"',
  mkv: 'video/x-matroska; codecs="theora, vorbis"',
  webm: 'video/webm; codecs="vp8, vorbis"',
};

let supportCache = null;

export default function getSupportedFormats() {
  if (supportCache) {
    return supportCache;
  }
  const video = document.createElement('video');
  supportCache = [];
  for (const type in FORMATS) {
    const canPlay = video.canPlayType(FORMATS[type]);
    if (canPlay.length && canPlay !== 'no') {
      supportCache.push(type);
    }
  }
  return supportCache;
}
```

OVRVideo.js simply re-exports the functions and classes in this directory, so we won't analyze it here.

BasicVideoPlayer.js

First, the file defines the media events it exposes. If you are not familiar with the basic video events, see their definitions at https://developer.mozilla.org/en-US/docs/Web/Guide/Events/Media_events

```js
const MEDIA_EVENT_TYPES = [
  'canplay',
  'durationchange',
  'ended',
  'error',
  'timeupdate',
  'pause',
  'playing',
];

/**
 * The basic video player
 */
export default class BasicVideoPlayer {
  onMediaEvent: ?(any) => void;
  videoElement: HTMLVideoElement;
  _muted: boolean;
  _volume: number;

  /**
   * Subclasses may use this property to define the video format
   * the video player supports. e.g. If a video player defined
   * `supportedFormats = ['mp4']`, when playing a .webm format video,
   * VRVideoComponent will fall back to use other video player.
   */
  static supportedFormats: ?Array<string> = null;

  constructor() {
    this.videoElement = document.createElement('video');
    this.videoElement.style.display = 'none';
    // Prevent mobile browsers from forcing fullscreen playback;
    // inside WeChat we have to configure this ourselves.
    this.videoElement.setAttribute('playsinline', 'playsinline');
    this.videoElement.setAttribute('webkit-playsinline', 'webkit-playsinline');
    // The video element is appended to the end of body
    if (document.body) {
      document.body.appendChild(this.videoElement);
    }
    this._volume = 1.0;
    this._muted = false;
    this.onMediaEvent = undefined;
    (this: any)._onMediaEvent = this._onMediaEvent.bind(this);
  }

  // Mainly sets the video's source
  initializeVideo(src: string, metaData: any) {
    this.videoElement.src = src;
    this.videoElement.crossOrigin = 'anonymous';
    this._bindMediaEvents();
    this.videoElement.load();
  }

  // Checks whether enough data is buffered to keep playing
  hasEnoughData(): boolean {
    return (
      !!this.videoElement && this.videoElement.readyState === this.videoElement.HAVE_ENOUGH_DATA
    );
  }

  // Forwards the events fired by the video element to the outside
  _bindMediaEvents() {
    MEDIA_EVENT_TYPES.forEach(eventType => {
      this.videoElement.addEventListener(eventType, this._onMediaEvent);
    });
  }

  _unbindMediaEvents() {
    MEDIA_EVENT_TYPES.forEach(eventType => {
      this.videoElement.removeEventListener(eventType, this._onMediaEvent);
    });
  }

  _onMediaEvent(event: any) {
    if (typeof this.onMediaEvent === 'function') {
      this.onMediaEvent(event);
    }
  }

  setVolume(volume: number) {
    this.videoElement.volume = volume;
  }

  setMuted(muted: boolean) {
    this.videoElement.muted = muted;
  }

  play() {
    this.videoElement.play();
  }

  pause() {
    this.videoElement.pause();
  }

  seekTo(position: number) {
    this.videoElement.currentTime = position;
  }

  // Destroys the video
  dispose() {
    this.pause();
    if (document.body) {
      document.body.removeChild(this.videoElement);
    }
    this.videoElement.src = '';
    this._unbindMediaEvents();
    this.onMediaEvent = undefined;
  }
}
```

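In short, BasicVideoPlayer is a thin wrapper around a hidden `<video>` element: it appends the element to `body`, forwards a fixed list of media events through `onMediaEvent`, exposes `play`, `pause`, `seekTo`, volume and mute controls, and cleans everything up in `dispose()`.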
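The reason `_onMediaEvent` is bound once in the constructor is that `removeEventListener` only detaches a listener when it receives the exact same function reference that was passed to `addEventListener`. The sketch below reproduces that bind / forward / unbind pattern outside the browser. It assumes Node.js 15 or later, where `EventTarget` and `Event` are globals; the `EventForwarder` class and the plain `EventTarget` standing in for the `<video>` element are hypothetical.

```js
// The media events BasicVideoPlayer forwards.
const MEDIA_EVENT_TYPES = ['canplay', 'durationchange', 'ended', 'error', 'timeupdate', 'pause', 'playing'];

// Hypothetical stand-in for BasicVideoPlayer's event plumbing.
class EventForwarder {
  constructor(target) {
    this.target = target; // plays the role of this.videoElement
    this.onMediaEvent = undefined;
    // Bind once so add/removeEventListener receive the same function reference.
    this._onMediaEvent = this._onMediaEvent.bind(this);
  }

  bind() {
    MEDIA_EVENT_TYPES.forEach(type => this.target.addEventListener(type, this._onMediaEvent));
  }

  unbind() {
    MEDIA_EVENT_TYPES.forEach(type => this.target.removeEventListener(type, this._onMediaEvent));
  }

  _onMediaEvent(event) {
    // Forward only if a consumer has registered a callback.
    if (typeof this.onMediaEvent === 'function') {
      this.onMediaEvent(event);
    }
  }
}

// Usage: record the types of the events that get forwarded.
const received = [];
const forwarder = new EventForwarder(new EventTarget());
forwarder.onMediaEvent = event => received.push(event.type);
forwarder.bind();
forwarder.target.dispatchEvent(new Event('playing'));
forwarder.target.dispatchEvent(new Event('seeking')); // not in the list, ignored
forwarder.unbind();
forwarder.target.dispatchEvent(new Event('pause')); // listener removed, ignored
console.log(received); // [ 'playing' ]
```

Had the constructor not stored the bound function, each `.bind(this)` call would create a new function and `unbind()` would silently leave the listeners attached.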

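As for getSupportedFormats.js above, it probes which video encodings the browser can decode and returns the list of supported formats, caching the result after the first call. Last year I wrote a more detailed article on using canPlayType(), "Detecting Browser Compatibility for video and audio".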
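`canPlayType()` returns one of three strings: `''` (cannot play), `'maybe'`, or `'probably'`; some older browsers returned `'no'`, which is why the original code checks both `canPlay.length` and `canPlay !== 'no'`. Below is a minimal sketch of the same cached probe that takes the `canPlayType` function as a parameter so it can run outside a browser; the `probeFormats` name and the fake `canPlayType` are hypothetical.

```js
const FORMATS = {
  ogg: 'video/ogg; codecs="theora, vorbis"',
  mp4: 'video/mp4; codecs="avc1.4D401E, mp4a.40.2"',
  mkv: 'video/x-matroska; codecs="theora, vorbis"',
  webm: 'video/webm; codecs="vp8, vorbis"',
};

let supportCache = null;

// Hypothetical variant of getSupportedFormats() with the probe injected.
// In a browser: const v = document.createElement('video'); probeFormats(t => v.canPlayType(t));
function probeFormats(canPlayType) {
  if (supportCache) {
    return supportCache; // computed once, reused afterwards
  }
  supportCache = [];
  for (const type of Object.keys(FORMATS)) {
    const canPlay = canPlayType(FORMATS[type]);
    // '' means "cannot play"; 'no' was returned by some legacy browsers.
    if (canPlay.length && canPlay !== 'no') {
      supportCache.push(type);
    }
  }
  return supportCache;
}

// Fake browser that supports MP4 ('probably') and WebM ('maybe') only.
const fakeCanPlayType = mime =>
  mime.startsWith('video/mp4') ? 'probably' : mime.startsWith('video/webm') ? 'maybe' : '';

console.log(probeFormats(fakeCanPlayType)); // [ 'mp4', 'webm' ]
```

Because of the module-level cache, a second call returns the same array even if a different probe is passed in.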

VRVideoPlayer.js

```js
import BasicVideoPlayer from './BasicVideoPlayer';
import getSupportedFormats from './getSupportedFormats';
import type {VideoDef} from './VRVideoComponent';

const _customizedVideoPlayers: Array<Class<BasicVideoPlayer>> = [];
let _customizedSupportCache: ?Array<string> = null;

/**
 * Returns the player class to use for the given video
 */
export function getVideoPlayer(videDef: ?VideoDef): Class<BasicVideoPlayer> {
  for (let i = 0; i < _customizedVideoPlayers.length; i++) {
    const player = _customizedVideoPlayers[i];
    const format = videDef ? videDef.format : null;
    // Here we use == to compare to both null and undefined
    if (
      player.supportedFormats == null ||
      format == null ||
      player.supportedFormats.indexOf(format) > -1
    ) {
      return player;
    }
  }
  return BasicVideoPlayer;
}

/**
 * Registers a custom player implementation
 */
export function addCustomizedVideoPlayer(player: Class<BasicVideoPlayer>) {
  _customizedVideoPlayers.push(player);
}

// Returns the video formats supported by the browser plus all custom players
export function getCustomizedSupportedFormats(): Array<string> {
  if (_customizedSupportCache) {
    return _customizedSupportCache;
  }
  // Note: this is the same array object cached inside getSupportedFormats(),
  // so the pushes below also modify that cache.
  _customizedSupportCache = getSupportedFormats();
  for (let i = 0; i < _customizedVideoPlayers.length; i++) {
    const player = _customizedVideoPlayers[i];
    if (player.supportedFormats) {
      const supportedFormats = player.supportedFormats;
      for (let j = 0; j < supportedFormats.length; j++) {
        if (_customizedSupportCache.indexOf(supportedFormats[j]) < 0) {
          _customizedSupportCache.push(supportedFormats[j]);
        }
      }
    }
  }
  return _customizedSupportCache;
}
```
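The selection rule in `getVideoPlayer()` walks the custom players in registration order and returns the first one that either declares no `supportedFormats` (it accepts anything) or lists the requested format; a `videoDef` without a format also matches the first custom player. If none match, it falls back to `BasicVideoPlayer`. Below is a DOM-free sketch of that rule together with the format union from `getCustomizedSupportedFormats()`. The `HlsPlayer` and `DashPlayer` classes and the `'m3u8'` / `'mpd'` formats are hypothetical placeholders, and the built-in format list is passed in rather than probed.

```js
class BasicVideoPlayer {
  static supportedFormats = null;
}

// Hypothetical custom players, as if registered with addCustomizedVideoPlayer().
class HlsPlayer extends BasicVideoPlayer {
  static supportedFormats = ['m3u8'];
}
class DashPlayer extends BasicVideoPlayer {
  static supportedFormats = ['mpd', 'mp4'];
}

const customPlayers = [HlsPlayer, DashPlayer];

// Same matching rule as getVideoPlayer(): the first compatible custom player wins.
function pickPlayer(videoDef) {
  const format = videoDef ? videoDef.format : null;
  for (const player of customPlayers) {
    // == null matches both null and undefined
    if (player.supportedFormats == null || format == null || player.supportedFormats.includes(format)) {
      return player;
    }
  }
  return BasicVideoPlayer;
}

// Union of built-in formats and custom ones, without duplicates.
// Copying builtIn avoids mutating the caller's (possibly cached) array.
function unionFormats(builtIn) {
  const result = builtIn.slice();
  for (const player of customPlayers) {
    for (const f of player.supportedFormats || []) {
      if (!result.includes(f)) result.push(f);
    }
  }
  return result;
}

console.log(pickPlayer({src: 'a.m3u8', format: 'm3u8'}).name); // HlsPlayer
console.log(pickPlayer({src: 'a.mp4', format: 'mp4'}).name); // DashPlayer
console.log(pickPlayer({src: 'a.webm', format: 'webm'}).name); // BasicVideoPlayer
console.log(unionFormats(['mp4', 'webm'])); // [ 'mp4', 'webm', 'm3u8', 'mpd' ]
```

Unlike the original, which pushes directly into the array returned by `getSupportedFormats()`, this sketch copies the built-in list first so the probe cache stays untouched.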

VRVideoComponent.js

```js
import {getVideoPlayer} from './VRVideoPlayer';
import * as THREE from 'three';
import type {Texture} from 'three';

interface VideoPlayer {
  initializeVideo(src: string, metaData: any): void,
  dispose(): void,
  hasEnoughData(): boolean,
}

export type VideoDef = {
  src: string,
  format: ?string,
  metaData: any,
};

export default class VRVideoComponent {
  onMediaEvent: ?(any) => void;
  videoDef: ?VideoDef;
  videoPlayer: ?VideoPlayer;
  videoTextures: Array<Texture>;

  constructor() {
    this.videoPlayer = null;
    this.videoTextures = [];
    this.onMediaEvent = undefined;
    (this: any)._onMediaEvent = this._onMediaEvent.bind(this);
  }

  /**
   * @param videoDef definition of a video to play
   * @param videoDef.src url of video if the streamingType is none
   */
  setVideo(videoDef: VideoDef) {
    this._freeVideoPlayer();
    this._freeTexture();
    this._setVideoDef(videoDef);
    this.videoPlayer = new (getVideoPlayer(this.videoDef))();
    this.videoPlayer.onMediaEvent = this._onMediaEvent;
    // Video texture. Roughly speaking, 360 video rendering works by taking the
    // current video frame as an image and drawing it onto a three.js object.
    const texture = new THREE.Texture(this.videoPlayer.videoElement);
    texture.generateMipmaps = false;
    texture.wrapS = THREE.ClampToEdgeWrapping;
    texture.wrapT = THREE.ClampToEdgeWrapping;
    texture.minFilter = THREE.LinearFilter;
    texture.magFilter = THREE.LinearFilter;
    // For rectlinear and equirect video, we use same texture for both eye
    this.videoTextures[0] = texture;
    // Uncomment when we support stereo cubemap.
    //this.videoTextures[1] = texture;
    if (this.videoDef) {
      const videoDef = this.videoDef;
      if (this.videoPlayer) {
        this.videoPlayer.initializeVideo(videoDef.src, videoDef.metaData);
      }
    }
  }

  _setVideoDef(videoDef: VideoDef) {
    this.videoDef = {
      src: videoDef.src,
      format: videoDef.format,
      metaData: videoDef.metaData,
    };
  }

  _onMediaEvent(event: any) {
    if (typeof this.onMediaEvent === 'function') {
      this.onMediaEvent(event);
    }
  }

  // Disposes of the current video player
  _freeVideoPlayer() {
    if (this.videoPlayer) {
      this.videoPlayer.dispose();
    }
    this.videoPlayer = null;
  }

  // Disposes of the textures
  _freeTexture() {
    for (let i = 0; i < this.videoTextures.length; i++) {
      if (this.videoTextures[i]) {
        this.videoTextures[i].dispose();
      }
    }
    this.videoTextures = [];
  }

  // Flags the textures for update
  frame() {
    if (this.videoPlayer && this.videoPlayer.hasEnoughData()) {
      for (let i = 0; i < this.videoTextures.length; i++) {
        if (this.videoTextures[i]) {
          this.videoTextures[i].needsUpdate = true;
        }
      }
    }
  }

  dispose() {
    this._freeVideoPlayer();
    this._freeTexture();
    this.onMediaEvent = undefined;
  }
}
```
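`frame()` is presumably called once per rendered frame. A `THREE.Texture` built from a `<video>` element does not refresh itself: setting `needsUpdate = true` is what tells three.js to re-upload the element's current frame to the GPU on the next render. The guard `hasEnoughData()` compares `readyState` with `HAVE_ENOUGH_DATA`, whose numeric value is 4. The sketch below mimics that loop with plain objects standing in for the video element and the texture, so it runs without a browser or three.js; `FakeTexture` (modelled on how three.js bumps a texture's `version` when `needsUpdate` is set) and `FakeVideoComponent` are hypothetical.

```js
const HAVE_ENOUGH_DATA = 4; // value of HTMLMediaElement.HAVE_ENOUGH_DATA

// Stand-in for THREE.Texture: setting needsUpdate = true bumps `version`,
// which the renderer compares to decide whether to re-upload the image.
class FakeTexture {
  constructor(image) {
    this.image = image;
    this.version = 0;
  }
  set needsUpdate(value) {
    if (value === true) this.version++;
  }
}

// Hypothetical component mirroring VRVideoComponent.frame().
class FakeVideoComponent {
  constructor(videoElement) {
    this.videoElement = videoElement;
    this.videoTextures = [new FakeTexture(videoElement)];
  }
  hasEnoughData() {
    return !!this.videoElement && this.videoElement.readyState === HAVE_ENOUGH_DATA;
  }
  frame() {
    // Only flag textures once enough data is buffered to play through.
    if (this.hasEnoughData()) {
      for (const texture of this.videoTextures) {
        if (texture) texture.needsUpdate = true;
      }
    }
  }
}

const video = {readyState: 1}; // HAVE_METADATA: no frame data yet
const component = new FakeVideoComponent(video);
component.frame(); // skipped, not enough data
video.readyState = HAVE_ENOUGH_DATA;
component.frame();
component.frame();
console.log(component.videoTextures[0].version); // 2
```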

By now you should have a fairly good understanding of how the video player works.